Research Topics

- # Natural Language Processing

- # LLM

- # LLM Web Agent

- # Deep Learning

- # Interactive System

- # HCI

- # Creative Toolkit

- # Image & Video Understanding

- # AR / VR

- # Computer Graphics

- # A11Y

- # Computer Vision

graphiti: Sketch-based Graph Analytics for Images and Videos

(Accepted to CHI '22, acceptance rate: 12.5%) Doi | Code | Abstract

Dr. Nazmus Saquib, Faria Huq, Dr. Syed Arefinul Haque

Keywords: Sketching Interface, Embodied Mathematics, Graph Analytics, Computer Graphics, Computer Vision, Image Processing

Graph and network analytics are mostly performed using a combination of symbolic expressions, code, and graph visualizations. These different representations enable graph-oriented conceptualization, analytics, and presentation of relationships in networks. While many visualization designs are implemented for visual understanding of graphs, they tend to be designed for custom applications, and do not facilitate graph algebra. We define a design space of general graph analytics by summarizing the commonly used graphical representations (graphs, simplicial complexes, and hypergraphs) and graph operations, and map these elements to three brushes and some direct manipulation techniques.

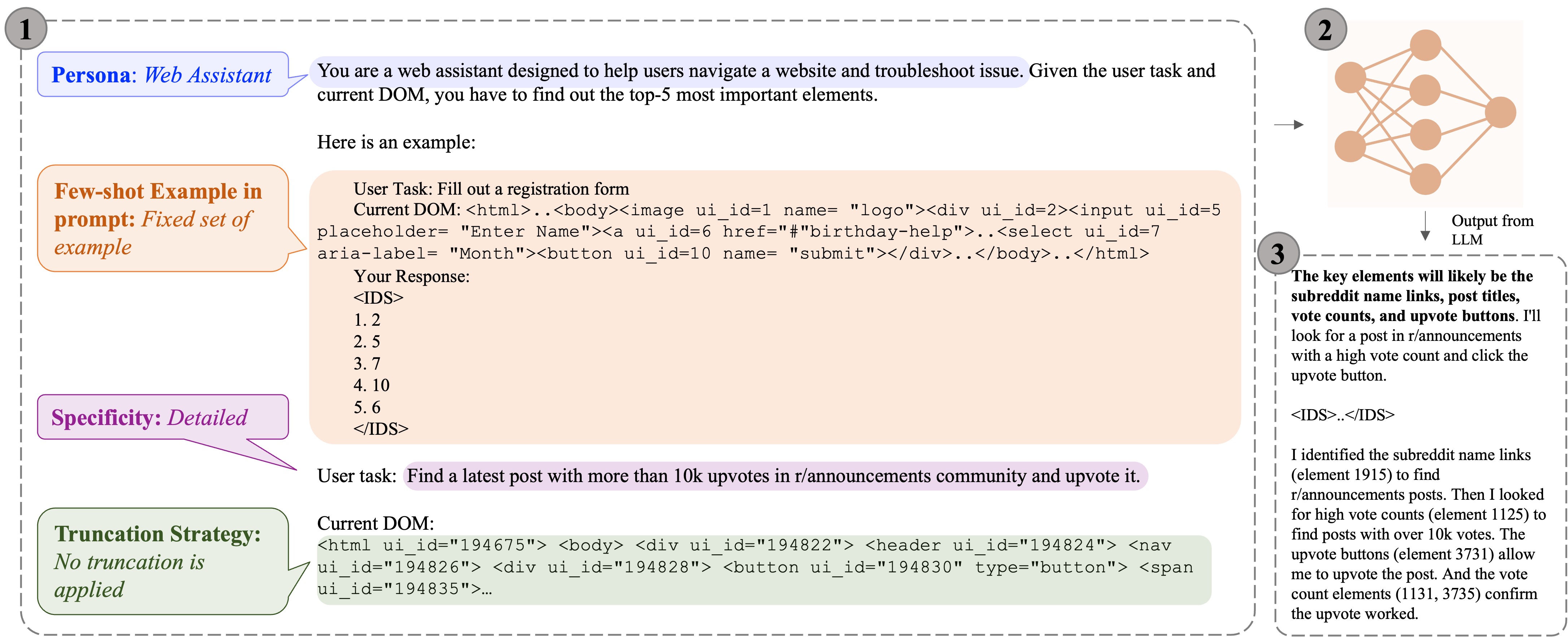

"What’s important here?": Opportunities and Challenges of Using LLMs in Retrieving Information from Web Interfaces

NeurIPS Workshop on Robustness of Foundation Models, 2023 PDF | Abstract

Faria Huq, Jeff Bigham, Nikolas Martelaro

Keywords: LLM, Prompt Tuning, Instruction Following, Web Interface

Large language models (LLMs) that have been trained on a large corpus of code exhibit a remarkable ability to understand HTML. As web interfaces are mainly constructed using HTML, we design an in-depth study to see how the code understanding ability of LLMs can be used to retrieve and locate important elements for a user-given query (i.e., a task description) in a web interface. In contrast with prior works, which primarily focused on autonomous web navigation, we decompose the problem into an even more atomic operation: can LLMs find the important information in a web page for a user-given query? This decomposition enables us to scrutinize the current capabilities of LLMs and uncover the opportunities and challenges they present. Our empirical experiments show that while LLMs exhibit a reasonable level of performance in retrieving important UI elements, there is still substantial room for improvement. We hope our investigation will inspire follow-up works in overcoming the current challenges in this domain.

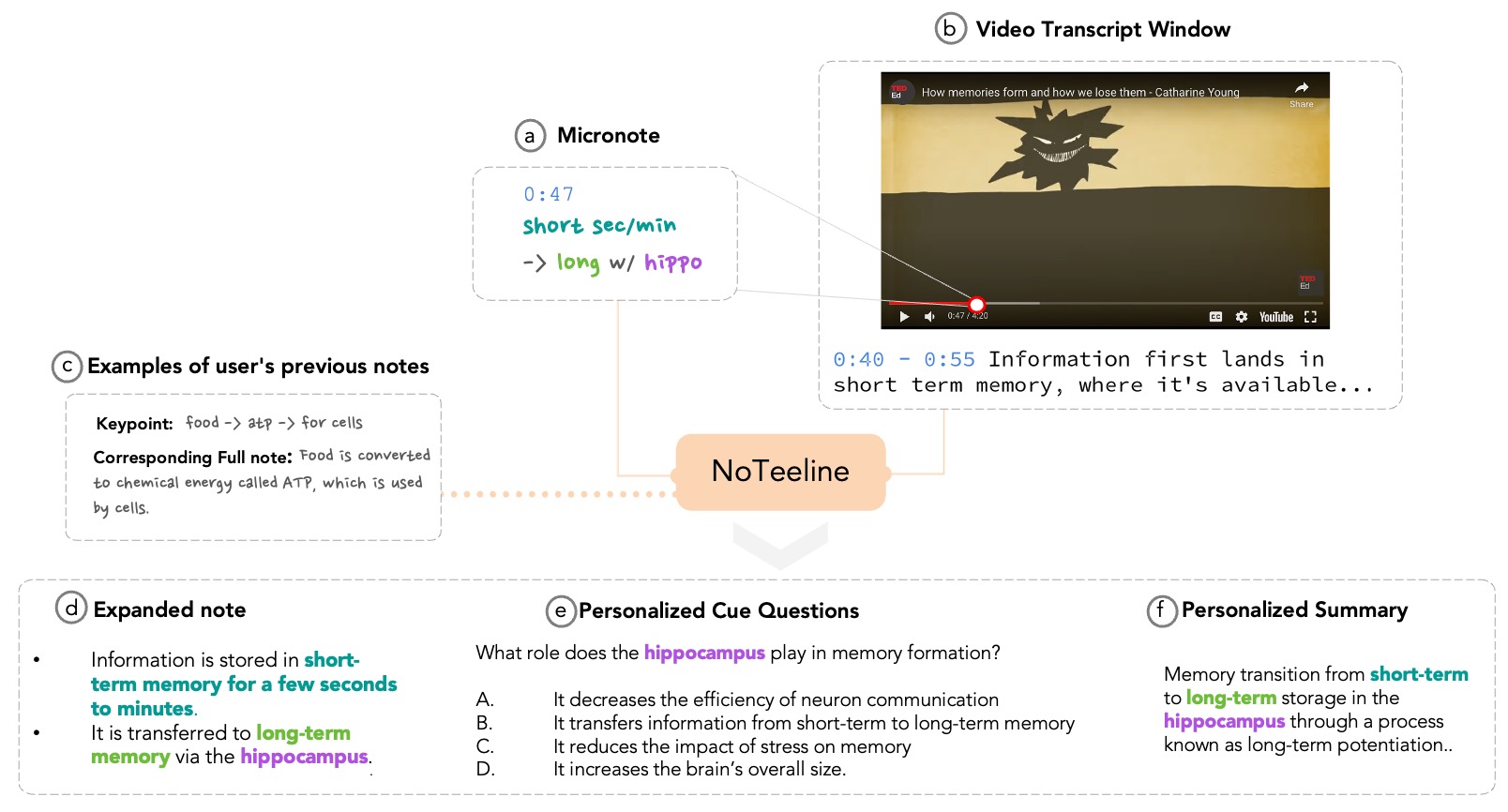

NoTeeline: Supporting Real-Time Notetaking from Keypoints with Large Language Models

Appearing in IUI '25

Faria Huq, Abdus Samee, David Chuan-En Lin, Alice Tang, Jeffrey Bigham

Keywords: LLM, Note Taking, Personalized Interaction for LLM

Video has become a popular medium for information sharing and consumption. However, taking notes while watching a video requires significant time and effort. To address this, we propose a novel interactive system, NoTeeline, for taking real-time, personalized notes. NoTeeline lets users quickly jot down key points (micronotes), which are automatically expanded into full-fledged notes that capture the content of the user's micronotes and mimic the user's writing style. In a within-subjects study (N=12), we found that NoTeeline helps users create high-quality notes that capture the essence of their micronotes with a low hallucination rate (6.8%) while accurately reflecting their writing style. While using NoTeeline, participants experienced significantly reduced mental effort, captured satisfactory notes while writing 47% less text, and completed notetaking in 43.9% less time compared to a manual notetaking baseline.

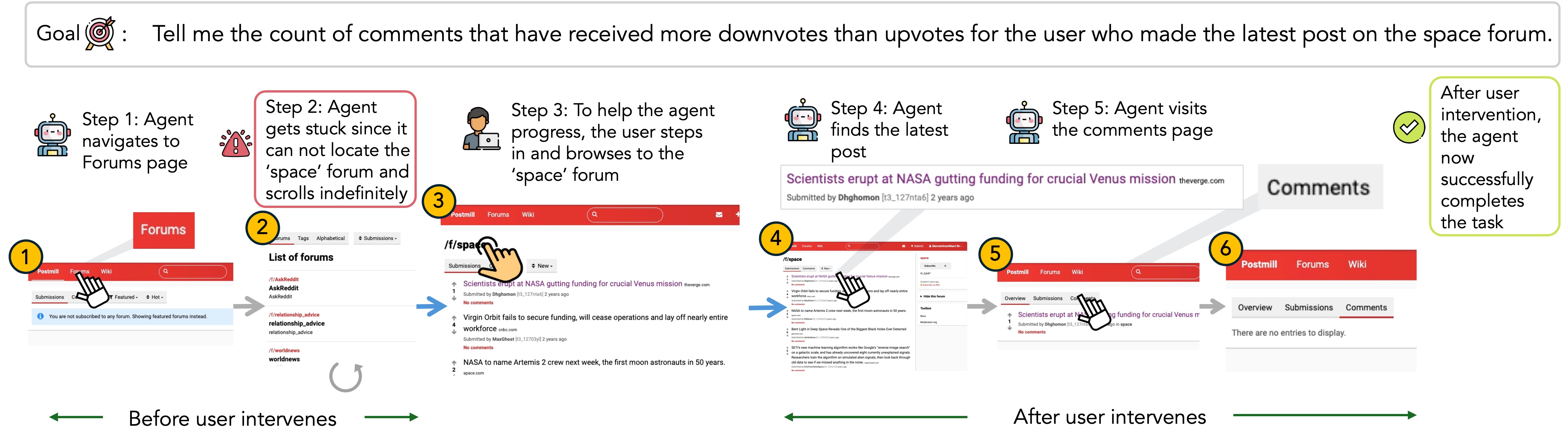

CowPilot: A Framework for Autonomous and Human-Agent Collaborative Web Navigation

Under Review in NAACL Demo Track '25

Faria Huq, Zora Zhiruo Wang, Frank F. Xu, Tianyue Ou, Shuyan Zhou, Jeffrey P. Bigham*, Graham Neubig*

Keywords: LLM, LLM Agent, Human-Agent Collaboration

Web agents capable of conducting web activities on users' behalf have gained attention for their task automation potential. However, they often fall short on complex tasks in dynamic, real-world contexts, making it essential for users to work collaboratively with the agent. We present CowPilot, a framework that supports both autonomous and human-agent collaborative web navigation, as well as evaluation across task success, user experience, and task efficiency. CowPilot reduces human effort by having the agent propose next steps while allowing humans to execute, pause, or reject agent-proposed steps and take alternative actions instead, supporting seamless action-taking between agent and human participants. We conduct case studies on five websites and find that the human-agent collaborative mode achieves the highest success rate, 95%, while requiring humans to perform only 15.2% of the total steps. Even with human interventions during task execution, the agent successfully drives up to half of task success on its own. CowPilot serves as a useful tool for data collection and agent evaluation across websites, which we hope will facilitate further advances.

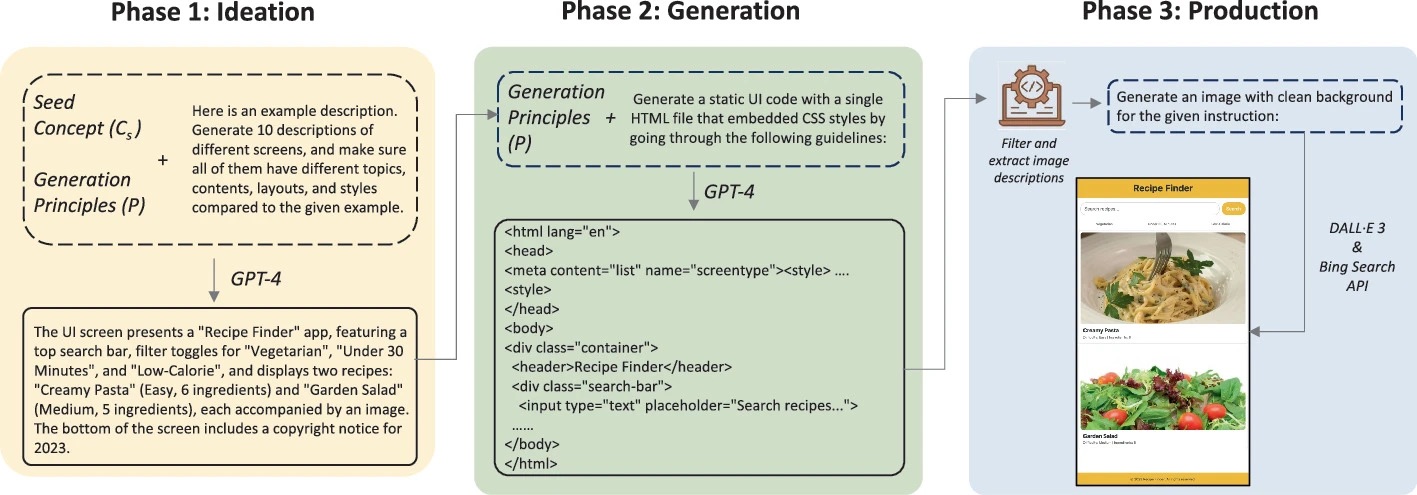

DreamStruct: Understanding Slides and UIs via Synthetic Data Generation

ECCV '24

YH Peng, Faria Huq, Y Jiang, J Wu, XY Li, JP Bigham, A Pavel.

Keywords: Synthetic Data, LLM, Visual Understanding

Enabling machines to understand structured visuals like slides and user interfaces is essential for making them accessible to people with disabilities. However, achieving such understanding computationally has required manual data collection and annotation, which is time-consuming and labor-intensive. To overcome this challenge, we present a method to generate synthetic, structured visuals with target labels using code generation. Our method allows people to create datasets with built-in labels and train models with a small number of human-annotated examples. We demonstrate performance improvements in three tasks for understanding slides and UIs: recognizing visual elements, describing visual content, and classifying visual content types.

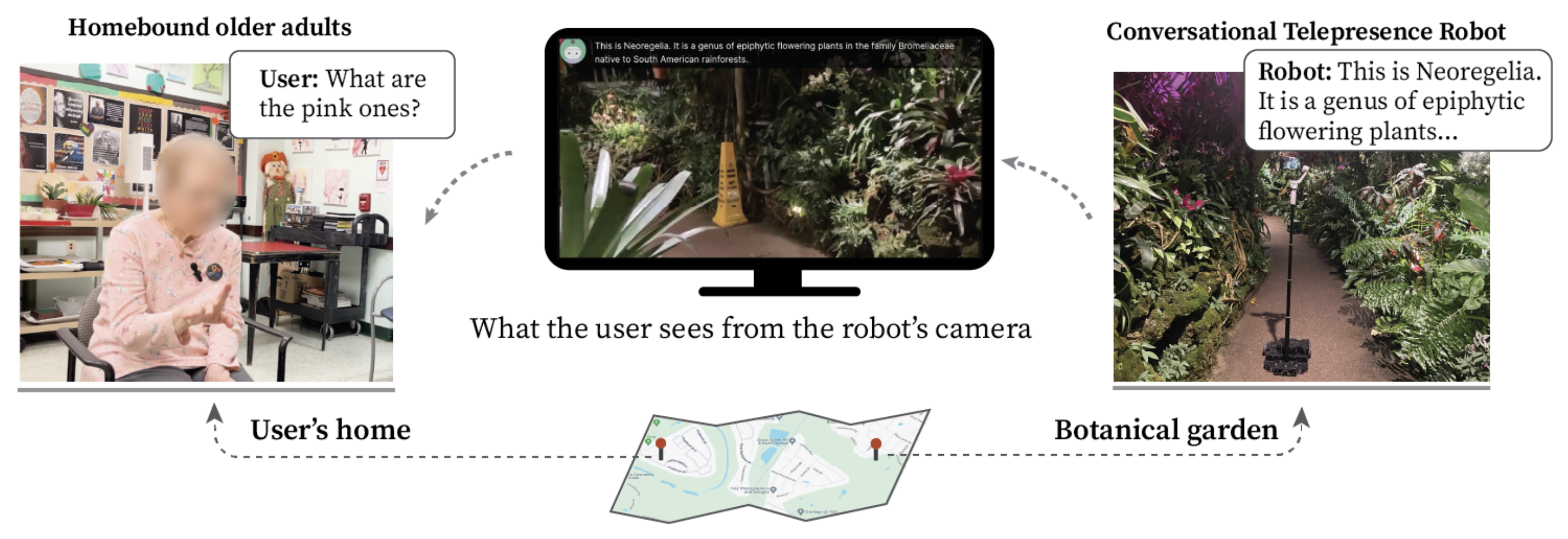

"This really lets us see the entire world:" Designing a conversational telepresence robot for homebound older adults

DIS '24

Yaxin Hu, Laura Stegner, Yasmine Kotturi, Caroline Zhang, Yi-Hao Peng, Faria Huq, Yuhang Zhao, Jeffrey P Bigham, Bilge Mutlu

Keywords: Older Adult, Accessibility, Human-Robot Interaction

In this paper, we explore the design and use of conversational telepresence robots to help homebound older adults interact with the external world. An initial needfinding study (N=8) using video vignettes revealed older adults’ experiential needs for robot-mediated remote experiences such as exploration, reminiscence and social participation. We then designed a prototype system to support these goals and conducted a technology probe study (N=11) to garner a deeper understanding of user preferences for remote experiences. The study revealed users' interaction patterns in each desired experience, highlighting the need for robot guidance and for social engagement with both the robot and remote bystanders. Our work identifies a novel design space where conversational telepresence robots can be used to foster meaningful interactions in the remote physical environment. We offer design insights into the robot’s proactive role in providing guidance and using dialogue to create personalized, contextualized and meaningful experiences.

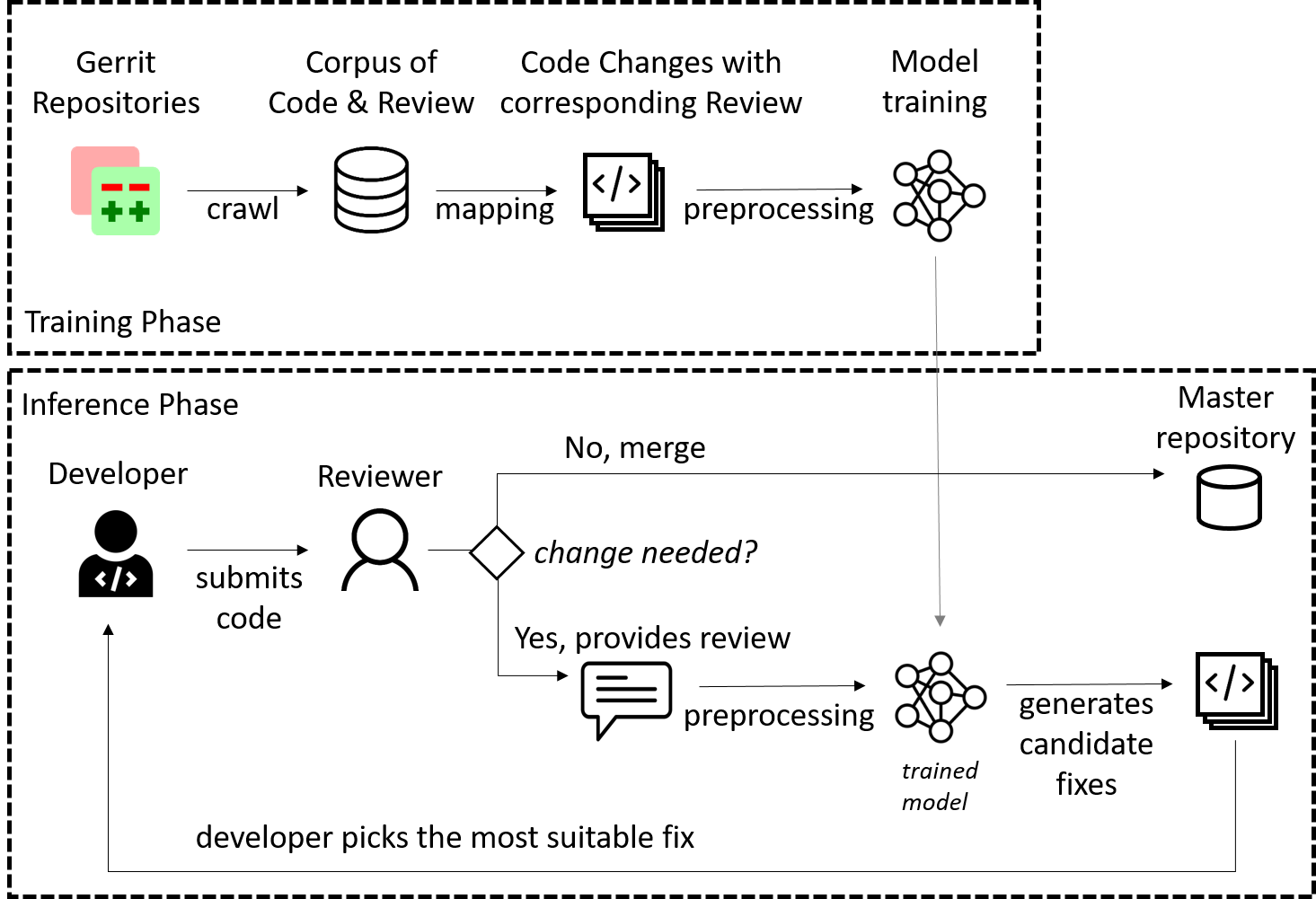

Review4Repair: Code Review Aided Automatic Program Repairing

(Published in Information and Software Technology) Doi | Abstract

Faria Huq, Masum Hasan, Mahim Anzum Haque Pantho, Sazan Mahbub, Prof. Anindya Iqbal, Toufique Ahmed

Keywords: Automatic Program Repair, Natural Language Processing

The natural language instructions written in code review comments are rich sources of information about the nature of a bug and its expected solution. In this study, we investigate how much code review comments can improve the performance of repair techniques. We train a sequence-to-sequence model on 55,060 code reviews and associated code changes. We also introduce new tokenization and preprocessing approaches that help to achieve significant improvement over state-of-the-art learning-based repair techniques. We boost the top-1 accuracy by 20.33% and top-10 accuracy by 34.82%. Our approach can also suggest fixes for stylistic and non-code errors unaddressed by prior techniques.

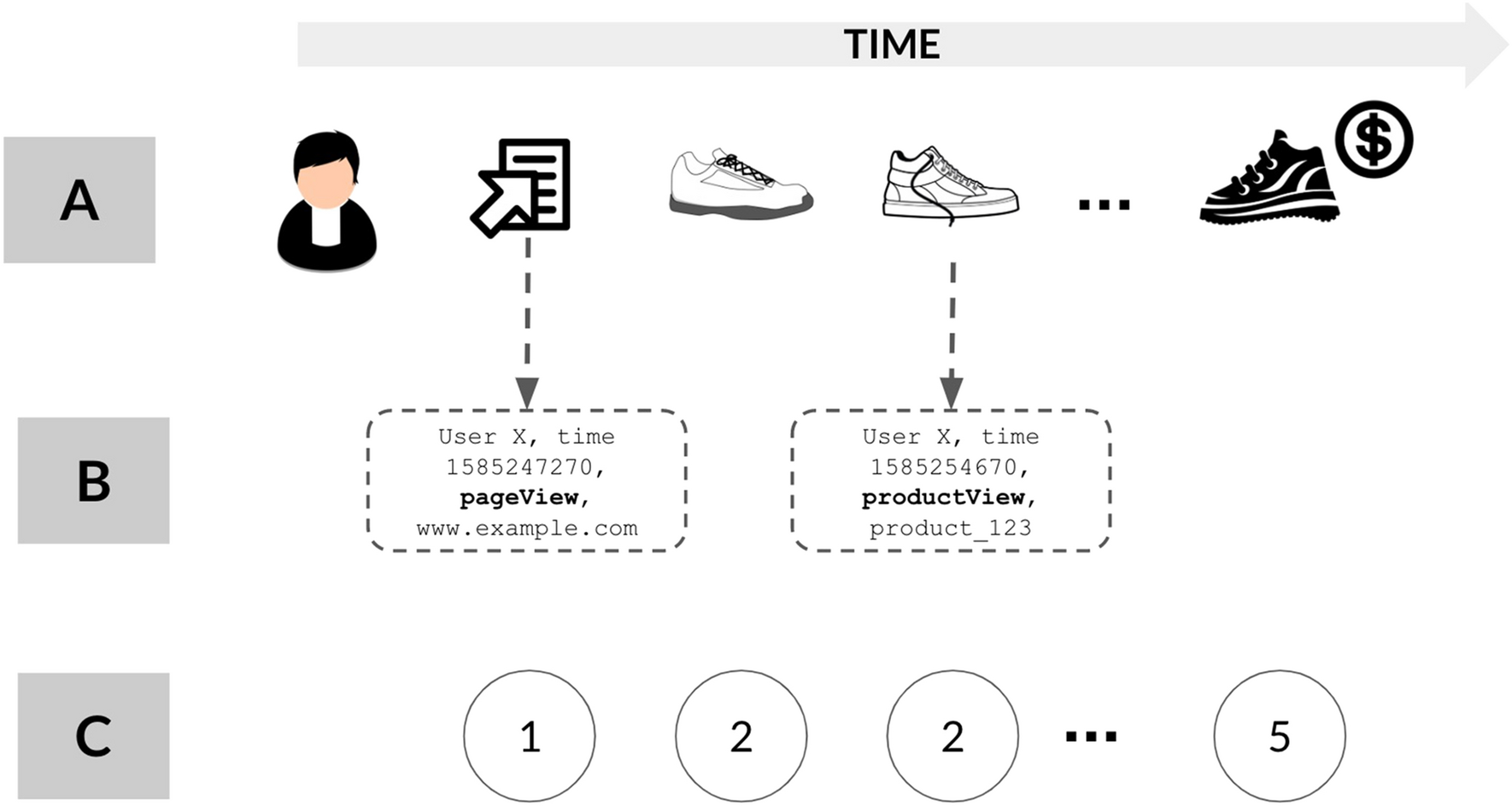

User Intention Prediction from Interaction Trace in Web Interfaces

Faria Huq

Keywords: LLM, Prompt Tuning, Instruction Following, Web Interface

Understanding user intention is an important subfield of HCI. For example, in search engines, intention prediction is important for understanding what the user is looking for and which types of search results they prefer by analyzing their clicking behavior. Based on their intention, the search engine can rank more relevant results higher. Similarly, by predicting user intention on webpages from their interaction trace, we can uncover what the user is aiming to do, potentially suggesting automated execution of the remaining steps or providing just-in-time intervention when they seem to be struggling to find the information they are looking for. To this end, we aim to predict user intention from their interaction trace with two objectives:

1) what is their actual goal that they are trying to achieve now?

2) what is the future trajectory of actions the user is likely to execute?

Riemannian Functional Map Synchronization for Probabilistic Partial Correspondence in Shape Networks

Preprint | Blog | Abstract

Faria Huq, Adrish Dey, Sahra Yusuf, Dr. Dena Bazazian, Dr. Tolga Birdal, Prof. Nina Miolane

Keywords: Riemannian Geometry, Shape Correspondence, Computer Graphics, Geometry Processing

This project is about probabilistic correspondence synchronization, a state-of-the-art technique in multi-way matching of a collection of 3D shapes usually represented as nodes in a graph. In particular, we will model correspondences via functional maps and build upon the prior work on permutation synchronization.

Static and Animated 3D Scene Generation from Free-form Text Descriptions

Faria Huq, Prof. Anindya Iqbal, Nafees Ahmed

Keywords: Natural Language Processing, Computer Graphics

Generating coherent and useful image/video scenes from a free-form textual description is a technically very difficult problem. Textual descriptions of the same scene can vary greatly from person to person, or sometimes even for the same person from time to time. As the choice of words and syntax varies when preparing a textual description, it is challenging for a system to reliably produce a consistently desirable output from different forms of language input. In our work, we study a new pipeline that aims to generate static as well as animated 3D scenes from different types of free-form textual scene descriptions without any major restriction. Our work shows a proof of concept of one approach towards solving the problem, and we believe that with enough training data, the same pipeline can be expanded to handle an even broader set of 3D scene generation problems.

A Tale on Abuse and Its Detection over Online Platforms, Especially over Emails: From the Context of Bangladesh

(Accepted in NSysS '21, acceptance rate: 16.67%) Best Paper Award | Code | Abstract

Ishita Haque, Rudaiba Adnin, Sadia Afroz, Faria Huq, Sazan Mahbub, Prof. A. B. M. Alim Al Islam

Keywords: Interactive System, Natural Language Processing

With the rise of interactive online platforms, online abuse is becoming more and more prevalent. To gain rich insights into users' experiences with abusive behaviors over email and other online platforms, we conducted semi-structured interviews with our participants. Through our user studies, we confirm a noteworthy demand to explore abuse detection over emails. Here, we reveal a clear preference from the users for an automated abuse detection system over a human-moderator-based system. These findings, along with the limited existing effort on abusive behavior detection and prevention systems for emails, inspired us to design and build "Citadel", a fully automated abuse detection system in the form of a Chrome extension.

Self-similarity loss for shape descriptor learning in correspondence problems

Short Description

Faria Huq, Kinjal Parikh, Lucas Valenca, Dr. Tal Shnitzer-Dery

Expected date of completion: February, 2022

Keywords: Deep Learning, Self-supervised Learning

Recent work on shape correspondence using functional maps developed several unsupervised frameworks for learning better shape descriptors for correspondence. One of the challenges in such shape correspondence tasks stems from symmetric ambiguity, where different shape regions are represented similarly due to symmetry and are therefore wrongly matched. In an attempt to address this challenge, we will explore the use of a recently introduced contextual loss function.

Anisotropic Schrödinger Bridges

Abstract | Short Description

Faria Huq, Jonathan Mousley, Juan Atehortúa, Adrish Dey, Prof. Justin Solomon

Expected date of completion: February, 2022

Keywords: Optimal Transport, Anisotropic Diffusion, Schrödinger Bridges

In this project, we implemented a discrete Schrödinger bridge model for anisotropic heat diffusion, biasing it to move along different paths on the surface, targeting applications in geometry processing. Currently, we are trying to optimize for the anisotropy of our operator to add constraints on the transport problem.

Embodied Vector Algebra

Faria Huq, Dr. Nazmus Saquib

Expected date of completion: May, 2022

Keywords: Sketching Interface, Embodied Mathematics, Vector Analytics, Computer Graphics

This is a work in progress. So far we have developed the basic interactions for drawing and modifying vectors. Currently we are working on the implementation of basic vector functions.

Chameleon User Interface

Abstract

Faria Huq, Z, Ron, Rita, Prof. David Lindlbauer

Keywords: Mixed Reality, Geometry Processing

Expected date of completion: February, 2022

Mixed Reality enables virtual interfaces to be placed at arbitrary locations in users’ environments, and with nearly arbitrary appearance. This can lead to challenges in designing user interfaces for Mixed Reality. On the one hand, if all user interface elements are shown to users, MR systems become hard to use because of visual clutter. On the other hand, if virtual interface elements are hidden, users have to constantly access them through menus and other cumbersome interactions. The goal of this project is to develop an approach that enables virtual interface elements to be constantly visible without introducing visual clutter and distraction. This is achieved by adapting the appearance of virtual interface elements based on their surrounding space. If a virtual interface element is placed next to a couch with round edges and colorful fabric, the interface element should adopt a comparable appearance. That way, the interface element blends into the environment, but remains visible and accessible for users.

Novel View Synthesis from blurred image

Faria Huq, Prof. Anindya Iqbal, Nafees Ahmed

Expected date of completion: March, 2022

Keywords: Neural Rendering, View Synthesis, Image Deblurring

We aim to synthesize a target image with an arbitrary target camera pose (novel view synthesis), given a source image of a dynamic scene containing motion blur and its camera pose. Our key insight is to utilize neural rendering to jointly remove motion blur artifacts using a deblurring technique and synthesize novel views from high-dimensional spatial feature vectors. We are using the Stereo Blur Dataset for our experimental analysis.

Real-world Anomaly Detection in Surveillance Videos by Analyzing Human Pose and Motion

Abstract